A framework for rethinking the way we talk about the AI future.

AI is both a new technology and a new type of technology. It is the first technology that learns and that has the potential to outstrip its makers’ capabilities and develop independently.

As Large Language Models bring to life the realities of AI’s potential to operate at unprecedented, ‘human’ levels of sophistication, projections about its future have gained urgency. The dominant framework being applied to identify AI’s potential futures is 165 years old: Charles Darwin’s theory of evolution.

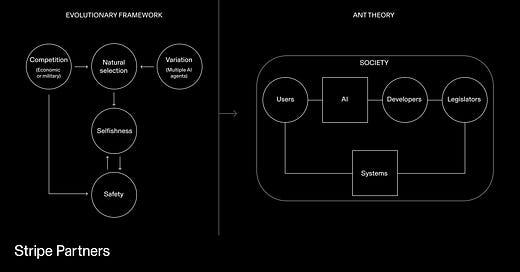

Darwin’s evolutionary framework is rendered most clearly in Dan Hendrycks’s work for the Center for AI Safety, which posits a future where natural selection could cause the most influential AI agents to have selfish tendencies, leading AIs to favour their own agendas over the safety of humankind.

The choice of natural selection as a framework makes sense given AI’s emerging status as a quasi-sentient, highly adaptive technology that can learn and grow. The choice is a response to the limitations inherent in existing models for technological adoption, which treat technologies as inert tools that only come to life when used by people.

The risk in applying this lens to AI is that it goes too far in assigning independent agency to AI. Estimates of when ‘Artificial General Intelligence’ will emerge vary, but spending some time with the current crop of Generative AI platforms confirms the view that AIs with intelligence close to that of humans are some way off. In the interim, using natural selection as a lens to understand AI positions humans as further out of the developmental loop than is actually the case. Competitive forces, whether market or military, will shape AI’s development, but these will not be the only forces at play, and direct interaction with humans will be the principal driver of AI’s progress in the near term.

The framework

A year ago we wrote about the opportunity to reframe the impact of AI on organisations through the lens of Actor Network Theory (ANT). More than a singular theory, ANT describes an approach to studying social and technological systems developed by Bruno Latour, Michel Callon, Madeleine Akrich and John Law in the early 1980s.

ANT posits that the social and natural world is best understood as dynamic networks of human and nonhuman actors. We tend to think of humans as actors with agency, and nonhuman entities as passive things that we use to achieve our objectives. But in ANT, anything that has an effect on anything else is afforded actor status. At least as a starting point, “things” are considered on a level playing field with people.

In our 2023 piece we suggested that ANT is a useful way of exploring how AI will impact people and how people will impact AI, because it frames society and human-technology interactions as dynamic networks in which every actor, whether human or machine, affects the network.

A year on, the value of ANT as a framework for exploring AI’s future has become clearer. The critical point when comparing an ANT frame to an evolutionary one is the way in which the ANT framing highlights how AI will progress with and through people’s interactions with it. When viewed as an actor in a network, not a technology in isolation, AI will never be separate from human interventions.

Using the framework

What this means in practice is that humans are shaping AI with intent, and our systems are in turn being shaped by AI. The critical point that ANT highlights is that we must be mindful of the checks and balances in the development of the networks and systems we share with AIs. Arguments for the unfettered, Darwinian development of AI, such as those laid out in Marc Andreessen’s Techno-Optimist Manifesto, are dangerous not because AI will follow an evolutionary route, but because they risk framing humans as passive recipients of the technology rather than active agents in its development.

Looking at the future of AI through the ANT frame highlights how entangled humanity and AI already are. The framework shows how much we need to ensure that AI is a positive actor in every network it touches. We need to be thinking in terms of being good citizens in our networks to ensure we set the right boundaries for AI as it develops.

When we view generative AI through the lens of ANT, the interdependence between humans and the technology becomes very clear. The ANT frame also highlights the degree to which AI’s development will be driven bottom-up, with people shaping every new development, for the time being at least. This part is vital because it calls on all of us to get involved to ensure AI develops in ways that are positive. AI is too significant for us to leave its growth to unseen forces.

The shift, then, is from a top-down, evolution-driven framing to a bottom-up, interdependent model in which humans are the vital engine of change: from the evolutionary framework for AI’s future to an ANT-derived one.

Frames is a monthly newsletter that sheds light on the most important issues concerning business and technology.

Stripe Partners helps technology-led businesses invent better futures. To discuss how we can help you, get in touch.

There is another difference between the two models that makes ANT more suitable as a frame for talking about AI: AI is totally dependent on human-created infrastructure. Not only can AI not evolve without human interaction, from users and developers for example, it also needs large amounts of electrical power and cooling water to be able to ‘exist’. In that sense it is not independent. Only with the ANT framework can elements such as data centers, power plants and internet cables be factored in.