This month's Frame: what is an LLM anyway?

A framework that helps us see the limits of our perception.

The most fascinating thing about Large Language Models is that no one - not even the people who create them - fully understands why they work. LLMs exhibit “emergent behaviour” such as translating text into languages they have not been explicitly exposed to, or originating abstract concepts that didn’t exist in their training data. In the words of Demis Hassabis, LLMs are “unreasonably effective for what they are”.

This “emergent behaviour” makes us uneasy for two reasons. First, because an artificially constructed technology should, logically, be possible to deconstruct; a product of computer science should be explainable by computer science.

And second, it makes us uneasy because inexplicability means unpredictability. When you can’t fully explain what something is, it is much harder to forecast the effects it might have. It is this gap in explainability, as much as the speed of innovation itself, that fuels the debate on the risks and impact of AI technology.

At its heart this is a debate between two camps. On one side are people who believe that science is ultimately capable of explaining, and therefore controlling, the technology. On the other are people who believe the capability of the technology will continue to outstrip science’s capacity to explain it, and that we must therefore focus on its potential effects today.

The framework

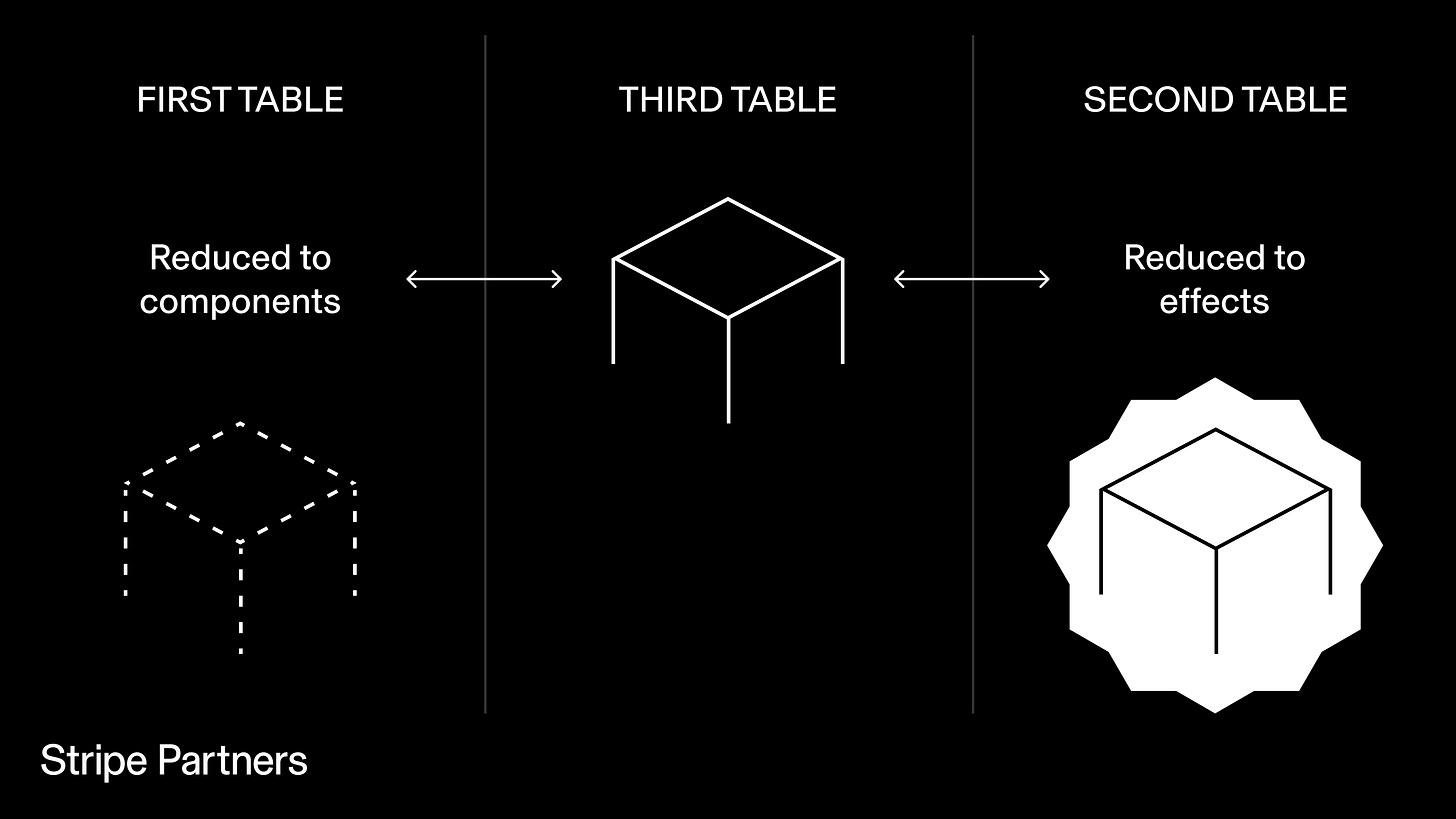

Our tendency to analyse things either through their components or their effects is explored in philosopher Graham Harman’s essay “The Third Table”. In it Harman uses the everyday object of a table to demonstrate our two modes for explaining phenomena: through science or via the humanities.

A scientist “reduces the table downward to tiny particles invisible to the eye”. For scientists, the real table is explained by physics as a series of electric charges, quarks and other tiny elements.

A humanist, on the other hand, “reduces [the table] upward to a series of effects on people and other things.” Here, the real table is the one that people experience and use in everyday life: to sit around, to work, to eat meals.

Harman argues that both these explanations are reductive. The scientific explanation does not account for the autonomous, lived reality that gives the table its “tableness”. And the humanist explanation misses something equally critical. The reality of the table cannot be fully explained by our relation to it. We can encounter aspects of the table, but our limited human senses cannot exhaust its depths as an object.

The real table is, in fact, the “Third Table” that exists independently of these first two explanations. “Our third table emerges as something distinct from its own components and also withdraws behind all its external effects. Our table is an intermediate being found neither in subatomic physics nor in human psychology, but in a permanent autonomous zone where objects are simply themselves.”

For Harman, we may be able to catch glimpses of the real table, but we are incapable of fully knowing it.

Using the framework

What does it mean to admit that LLMs, like tables, are fundamentally unknowable?

First, as users we might become more self-aware about what we are interacting with. We acknowledge an LLM is not a tool that is reducible and therefore limited to its components. But we also appreciate that it’s more than the effect it exerts on us. Just because it feels like we’re talking to another person when we interact with an LLM doesn’t mean we should reduce it to that metaphor, or expect that to be the ultimate design goal.

Second, by accepting that there is no simple explanation for what an LLM is, we initiate a new mindset of ongoing discovery. Rather than dismissing unexpected responses as errors, we encounter them as curious artefacts that could lead to more creative, valuable outcomes.

Third, we become more conscious of the role of context in co-creating the outputs of LLMs. Rather than reducing them to predictable, repeatable algorithms we reflect on our own role in what they produce, and the impact of the other systems and entities they interact with. Following Harman, the outputs of LLMs can be independent objects in themselves, and therefore separate from the models that generate them.

Fourth, our humility towards the hidden depths of LLMs means we remain cautious in how they are used, especially as independent agents. Their lack of precision means that, wherever verification matters, we check their outputs when they operate autonomously. We accept that an AI can never be fully aligned with its user. The concept of full alignment is, in fact, a category error.

Perhaps the real benefit of LLMs, then, is that they teach us to take more responsibility when we use technology. They hold up a mirror to our own imperfections, and in doing so help us realise what “good” really looks like. In the long run we won't experience emergent behaviours as just errors to be feared, explained or ironed out. Rather, they are an expected - even welcome - feature that keeps us accountable, because they remind us that this is a technology we can never fully know.

News

We are hosting an online event on Wednesday 16th October to examine the transformation of GLP-1 agonists from therapeutic agents into cultural artefacts.

We have assembled an exciting panel of experts spanning healthcare policy, history of medicine, and obesity medicine, and will be joined by the ILC, who have led pioneering initiatives to measure and promote healthy ageing and prevention both in the UK and globally.

Register your interest on our Eventbrite page.

Frames is a monthly newsletter that sheds light on the most important issues concerning business and technology.

Stripe Partners helps technology-led businesses invent better futures. To discuss how we can help you, get in touch.
