This month's Frame: using EQ to transform AI

A framework for using IQ and EQ to build AI-powered products.

In the age of AI, anthropomorphising technologies that don’t think, can’t reason and have no model or understanding of the world in which they act is usually considered a cardinal sin.

As we debate the timeline for the arrival of Artificial General Intelligence (AGI), or whether it's even possible, a wider framing problem lurks: what do we mean by intelligence at all? As Benedict Evans recently wrote, “we don’t have a coherent theoretical model of what general intelligence really is, nor why people are better at it than dogs.”

If human intelligence is itself inscrutable, evading an agreed, working definition, our progress to AGI has no yardstick. But there’s a more immediate problem. While tens of billions of dollars are poured into training bigger, faster, better models (“more tokens”, “more parameters”), only a fraction of those resources goes into imagining what sort of interactions people may want to have with these tools. The reported flatlining of ChatGPT usage is one ominous outcome of this.

What the “AI industry” lacks is a framework for thinking about fundamental questions of AI UI and UX.

As Tom Chatfield reminds us, anthropomorphism cuts both ways: we are nothing like machines, machines are nothing like us, and it’s a delusion to think that technology can be understood by analogy to our own capabilities. But what if anthropomorphism has benefits? What if admittedly incomplete, flawed and contested perspectives on two dimensions of human intelligence, IQ and EQ, are “good to think with” when designing AI experiences that meet people where they are?

The framework

The idea of Intelligence Quotient (IQ) is, for good reason, highly contested: performance on its tests has been shown to improve with study, there’s no strong evidence that historical geniuses had very high IQs, and discussion of it often descends into highly politicised debates about heritability. Yet despite these concerns, it has an enduring appeal in the popular (and academic) imagination as a way of understanding “raw intellectual horsepower”.

In 1983, Howard Gardner's Frames of Mind: The Theory of Multiple Intelligences popularised the idea that IQ failed to explain the full range of human cognitive abilities. He proposed seven intelligence types (later expanded to eight), including interpersonal intelligence (the capacity to understand the intentions, motivations and desires of other people) and intrapersonal intelligence (the capacity to understand oneself, to appreciate one's feelings, fears and motivations). His work set in train what might be called the EQ (Emotional Quotient) movement, a counterpoint to the single type of intelligence captured by IQ.

Perhaps because the idea is so attractive, EQ has multiple definitions. It’s typically understood to include characteristics such as empathy, sensitivity, attunement, and an understanding and appreciation of other people’s situations and perspectives. At heart, EQ is a relational form of intelligence that takes other people into account. Equally, people with high EQ have a good sense of what they do and don’t know.

Yet the AI industry seems focused on an IQ-centric notion of intelligence, as evidenced by what Ethan Mollick describes as the “core irony of generative AIs [which] is that AIs were supposed to be all logic and no imagination. Instead, we get AIs that make up information, engage in seemingly emotional discussions”.

Hallucinations (when an AI system generates something incorrect or misleading but presents it as if it were true) are not merely a bug but a feature of Generative AI, and their creators admit they are currently powerless to fix the issue. To paraphrase John Wanamaker: “Half the answers I get from Gen AI are false; the trouble is I don’t know which half.” Combine that with a narrative that frames these systems as all-knowing oracles, and we have a problem.

These are systems that “want” to help: to give answers where there are none and to return information that is fiction dressed up as fact. It would seem that many AI systems have let their IQ get ahead of their EQ.

Unlike a human with some measure of EQ (some awareness of what they do and do not know, and the ability to recognise and understand their own thoughts, feelings and emotions), AI systems are unable to acknowledge the limits of their IQ. They don’t have the EQ to admit their flaws.

More profoundly, they can’t recognise where their users are “coming from”: what they need, why they need it and what a helpful response might look like. As a result, their formidable IQ is worth less than it could be. They are unable to judge what users might find useful in specific contexts or use cases.

Using the framework

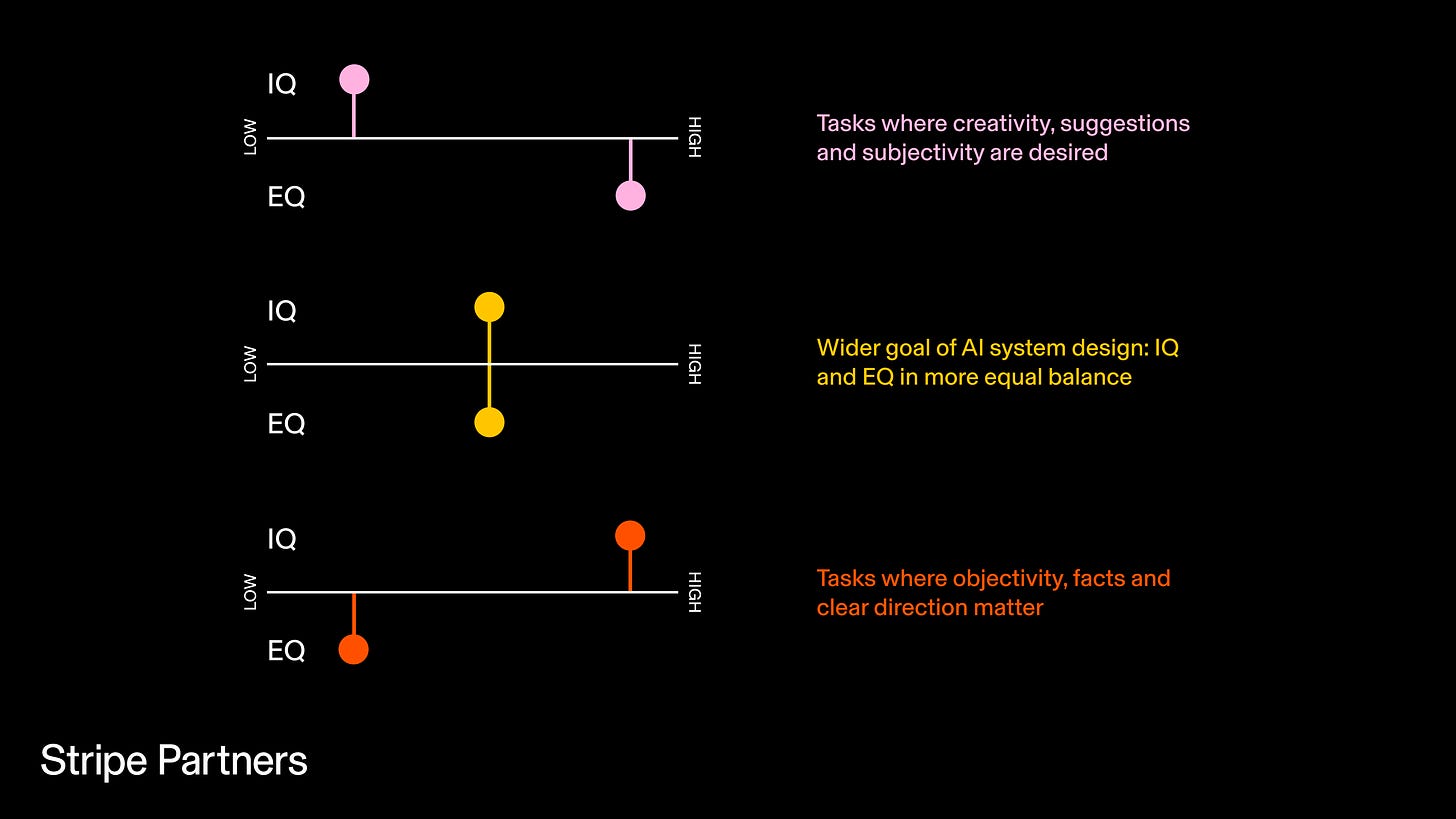

Thinking of IQ and EQ as opposing, but ideally correlated, dimensions of intelligent agents provides a set of concrete “ways in” for AI engineers and product designers to consider.

The IQ-EQ framework can be used at three levels: domain, user and interface.

Domain: How might an AI recognise requests in “no right answer” domains (such as restaurant or book recommendations), where there is no single correct response and subjective opinion is desirable, and distinguish them from other kinds of request?

Conversely, where a request demands objective facts (e.g. a feature comparison between two car models), how might an AI, like someone with high EQ, admit the bounds of its expertise or acknowledge margins of error?

User: How can an AI system understand the characteristics of a user that would help it tailor what it offers?

What problem is a user trying to solve? Do they need information or inspiration?

Are users seeking clear direction or support through suggestion?

What level of expertise, training or professionalism is someone bringing to a task?

Looking for research citations that actually exist is different from seeking ideas for what the leftovers in a fridge could be turned into. An academic seeking citations differs from a child looking for a shortcut. This is a central concern of AI alignment, which aims to steer AI toward a person's intended goals and preferences.

Interface: If EQ in humans is characterised by an ability to take others into account, then interfaces need to be designed around domain- and user-level considerations, either by drawing on existing knowledge of users or by giving the interface ways to elicit more insight into the user's purposes and positionality (or both).

A reliable and useful AI needs to balance IQ and EQ. Those building AI systems need to create mechanisms that allow these two dimensions of intelligence to sit in productive tension in their creations.

News

In the second in a series of interviews with research leaders that shed light on how our practice is evolving, SP speaks to Colette Kolenda about what she has learned from building and maintaining a high-impact team at Spotify.

SP speaks to author Tom Chatfield about his new book Wise Animals: How Technology Has Made Us What We Are. The conversation touched on wonder, delusion and hope in the stories we tell about technology.

Frames is a monthly newsletter that sheds light on the most important issues concerning business and technology.

Stripe Partners helps technology-led businesses invent better futures. To discuss how we can help you, get in touch.