This month's Frame: building agency into the relationships between humans and AI

A framework to help product teams design collaborative human-algorithmic interactions.

Today, we live in a world where our interactions with technology are increasingly dominated by machine learning algorithms, which have the unique capacity to learn and adapt in response to ongoing feedback from users.

However, the dominant interaction patterns that govern how product teams design for user behaviour remain stuck in a world where every last element of how a computer system should behave could be predetermined by the engineers building it. And perhaps more insidiously, the idea of designing to avoid “user error” remains at the heart of how many software teams think about building products.

The ideology of “user error” presupposes that users are not intelligent enough to understand and control their machines. It results in information asymmetry between the user and the algorithm, leading teams away from designing for user agency, despite the fact that ML algorithms provide far greater opportunities for users to influence machine output.

This is a mistake. In the absence of information, folk theories flourish. Some are less helpful than others, and can lead people to waste a lot of time trying to influence systems they have no real means of steering towards their goals. Over time, as frustrations compound, users will look for alternatives that better cater to their needs.

We need new ways to think about designing for interactions between users and their algorithmic agents. In his book “Machine Habitus: Towards a Society of Algorithms”, Massimo Airoldi outlines a typology of user-machine interactions that provides a useful framework for reconsidering these relationships.

The framework

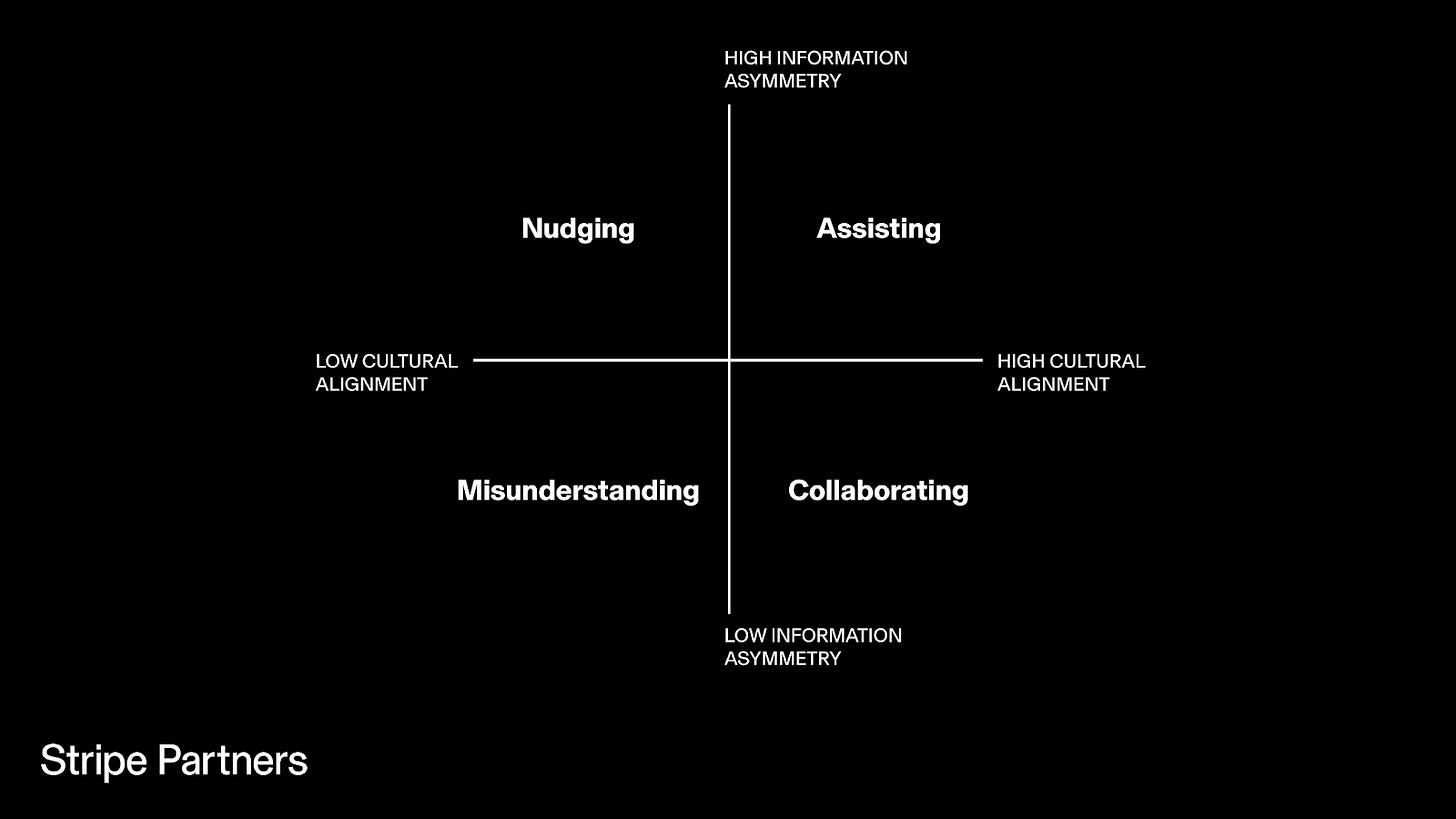

The typology Airoldi identifies is characterised by two factors governing the relationship between user and machine:

The level of information asymmetry, i.e., does the algorithmic agent know more about the user than the user knows about how the algorithmic agent works?

The degree of cultural alignment, i.e., to what extent do the values and culture encoded in the machine’s model align with those of its user?

Airoldi’s framework yields four types of interaction: assisting, nudging, misunderstanding and collaborating.

Assisting: when information asymmetry and cultural alignment are both high, the user is likely to be happy with the algorithmic output even though they do not understand how it came about. An example might be Spotify’s Daily Mix, which seamlessly picks up on the music each user likes and organises it into a set of personalised playlists. A downside of this type of interaction can be sameness, as the algorithmic agent is likely to reinforce pre-existing behaviours to stay aligned with a user’s preferences. Over time, a music listener might grow bored of hearing the same things over and over again.

Nudging: when information asymmetry is high but cultural alignment is low, the algorithmic agent may start steering users towards behaviours and habits that would surprise or displease them. On the positive side, nudging can help users break out of the reinforcement that can set in when the agent is assisting, e.g., if Spotify deliberately included some new music in Discover Weekly that sits outside the user’s established taste patterns. However, nudging can also be harmful, for example when a delivery platform’s algorithm consistently incentivises riders to work at times or in places that don’t suit them.

Misunderstanding: when both information asymmetry and cultural alignment are low, users will be aware that the agent isn’t operating in line with their goals and will likely be motivated to change the system. The algorithmic agents governing delivery platforms, which are increasingly the target of worker protest, provide a salutary case study. Take the story of an UberEats rider who created a platform, UberCheats, to demonstrate that UberEats was consistently miscalculating delivery distances and, as a result, underpaying riders.

Collaborating: when information asymmetry is low and cultural alignment is high, users are empowered to manipulate and reshape the algorithmic agent to suit their desires. Airoldi states this can only be achieved when the user and the algorithm’s creator are one and the same. However, there is evidence that algorithmically literate users are already using the limited tools at their disposal to reshape algorithmic agents to achieve their goals. In our recent research with teens, for example, we have seen a widespread practice of creating multiple Instagram accounts and tweaking the recommendations on each one to suit a specific set of interests. In its current form, though, this practice still relies on teens’ ability to “game the system” rather than being a true collaboration between equals.
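
Because the typology rests on just two factors, it can be read as a two-by-two lookup. The sketch below is our own simplification for illustration, not something Airoldi provides: it reduces each factor to a high/low flag, whereas in practice both are matters of degree. The names `Interaction` and `classify` are hypothetical.

```python
from enum import Enum


class Interaction(Enum):
    """The four types of user-machine interaction in Airoldi's typology."""
    ASSISTING = "assisting"
    NUDGING = "nudging"
    MISUNDERSTANDING = "misunderstanding"
    COLLABORATING = "collaborating"


def classify(high_asymmetry: bool, high_alignment: bool) -> Interaction:
    """Map the two factors onto one of the four types.

    Simplification (an assumption of this sketch): each factor is a binary flag.
    """
    if high_asymmetry:
        # The agent knows more about the user than the user knows about the agent.
        return Interaction.ASSISTING if high_alignment else Interaction.NUDGING
    # The user has a working understanding of how the agent behaves.
    return Interaction.COLLABORATING if high_alignment else Interaction.MISUNDERSTANDING


if __name__ == "__main__":
    # A rider who understands the algorithm but disagrees with the values it encodes.
    print(classify(high_asymmetry=False, high_alignment=False).value)
```

Seen this way, designing for collaboration means lowering asymmetry while keeping alignment high, rather than accepting whichever cell a product happens to land in.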

Using the framework

Product teams should stop defaulting to experiences that embrace high information asymmetry and start designing for active collaboration between users and their algorithmic agents. What might these interactions look like if they were consciously designed for, rather than the product of hacks and workarounds?

A first step would be to build explainability into the system, helping users develop mental models of how algorithmic agents work and so reducing information asymmetry. For example, a delivery platform could show riders the variables it used when proposing a particular delivery to them.
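
One simple way to make an offer explainable, assuming the platform scores offers with a linear model, is to show each variable's contribution to the score. The variable names, weights and the functions `explain_offer` and `offer_score` below are all hypothetical; real delivery platforms do not publish their models, and a non-linear model would need a dedicated attribution method instead.

```python
# Hypothetical weights for a linear offer-scoring model; not any real platform's.
OFFER_WEIGHTS = {
    "distance_to_pickup_km": -0.8,
    "rider_acceptance_rate": 1.5,
    "minutes_since_last_order": 0.1,
}


def explain_offer(features: dict[str, float]) -> list[tuple[str, float]]:
    """Return each variable's contribution (weight * value), largest effect first."""
    contributions = [(name, OFFER_WEIGHTS[name] * value) for name, value in features.items()]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


def offer_score(features: dict[str, float]) -> float:
    """In a linear model, the score is simply the sum of the contributions."""
    return sum(contribution for _, contribution in explain_offer(features))


if __name__ == "__main__":
    offer = {
        "distance_to_pickup_km": 2.0,
        "rider_acceptance_rate": 0.9,
        "minutes_since_last_order": 12,
    }
    # Print the breakdown a rider might see alongside the offer.
    for name, contribution in explain_offer(offer):
        print(f"{name:>26}: {contribution:+.2f}")
    print(f"{'total score':>26}: {offer_score(offer):+.2f}")
```

Showing the sign and size of each contribution gives riders something concrete to reason about, in place of a folk theory.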

Secondly, teams can design explicit interactions that invite users to adjust the output of their agents to better fit what they want at that moment. For example, Spotify could let users choose how much new music they want to hear in a playlist, or offer explicit “more like this” and “less like this” feedback controls.
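
Both controls could sit on top of an existing recommender as a thin re-ranking layer. The sketch below assumes a hypothetical setup in which candidate tracks are already split into familiar and new pools and tagged with a genre: the `novelty` slider sets the share of new music, and each “more like this” or “less like this” tap adjusts a per-genre boost. Spotify's actual systems are not public, so `PlaylistControls`, `build_playlist` and every other name here are illustrative.

```python
Track = tuple[str, str]  # (title, genre)


class PlaylistControls:
    """Hypothetical user-facing controls layered over an existing recommender."""

    def __init__(self, novelty: float = 0.2) -> None:
        # Share of the playlist drawn from music new to the user, clamped to [0, 1].
        self.novelty = min(1.0, max(0.0, novelty))
        self.genre_boost: dict[str, float] = {}

    def feedback(self, genre: str, more: bool, step: float = 0.5) -> None:
        """Record a 'more like this' (more=True) or 'less like this' (more=False) tap."""
        delta = step if more else -step
        self.genre_boost[genre] = self.genre_boost.get(genre, 0.0) + delta


def build_playlist(
    controls: PlaylistControls, familiar: list[Track], new: list[Track], length: int
) -> list[Track]:
    """Fill the playlist from both pools according to the user's settings."""
    n_new = round(length * controls.novelty)

    def by_preference(tracks: list[Track]) -> list[Track]:
        # Stable sort: tracks with equal boost keep the recommender's original order.
        return sorted(tracks, key=lambda t: controls.genre_boost.get(t[1], 0.0), reverse=True)

    # If a pool is too small, the playlist simply comes out shorter.
    return by_preference(familiar)[: length - n_new] + by_preference(new)[:n_new]


if __name__ == "__main__":
    controls = PlaylistControls(novelty=0.5)
    controls.feedback("jazz", more=True)
    familiar = [("Song A", "pop"), ("Song B", "jazz"), ("Song C", "rock")]
    new = [("Song X", "folk"), ("Song Y", "jazz")]
    print(build_playlist(controls, familiar, new, length=4))
```

Keeping the controls outside the model means the user's choices are applied transparently and immediately, rather than being absorbed into training signals they cannot see.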

Thirdly, products could be designed so that users can directly tweak the parameters of the models. Platforms could give users an interface where they can try changing certain parameters, see how each change affects their experience, and wind back to earlier settings if they prefer. For example, Instagram users could experiment with how much weight likes, views and dwell time carry in their recommendations.
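
Tweaking parameters with the ability to wind back amounts to a settings object that snapshots its state before every change. The sketch below assumes a hypothetical ranking score that is a weighted sum of three per-post signals, with the weights exposed to the user; Instagram offers no such controls and its real ranking is far more complex, so `RecommendationSettings` and `rank` are illustrative only.

```python
Signals = dict[str, float]


class RecommendationSettings:
    """Hypothetical user-tunable signal weights with an undo history."""

    DEFAULTS: Signals = {"likes": 1.0, "views": 1.0, "dwell_time": 1.0}

    def __init__(self) -> None:
        self.weights: Signals = dict(self.DEFAULTS)
        self._history: list[Signals] = []

    def set_weight(self, signal: str, value: float) -> None:
        if signal not in self.weights:
            raise KeyError(f"unknown signal: {signal}")
        self._history.append(dict(self.weights))  # snapshot so the change can be undone
        self.weights[signal] = max(0.0, value)  # this sketch disallows negative weights

    def undo(self) -> None:
        """Wind back to the settings in place before the most recent change."""
        if self._history:
            self.weights = self._history.pop()

    def reset(self) -> None:
        """Return to the defaults; the reset itself can be undone."""
        self._history.append(dict(self.weights))
        self.weights = dict(self.DEFAULTS)

    def score(self, post: Signals) -> float:
        return sum(weight * post.get(signal, 0.0) for signal, weight in self.weights.items())


def rank(settings: RecommendationSettings, posts: dict[str, Signals]) -> list[str]:
    """Order post IDs by their weighted score, highest first."""
    return sorted(posts, key=lambda post_id: settings.score(posts[post_id]), reverse=True)


if __name__ == "__main__":
    posts = {
        "meme": {"likes": 0.9, "views": 0.8, "dwell_time": 0.1},
        "essay": {"likes": 0.2, "views": 0.3, "dwell_time": 0.9},
    }
    settings = RecommendationSettings()
    print(rank(settings, posts))
    settings.set_weight("likes", 0.0)  # the user stops rewarding popularity
    print(rank(settings, posts))
    settings.undo()  # and changes their mind
    print(rank(settings, posts))
```

The undo history is what makes experimentation feel safe: users can try a setting, see its effect on their feed, and return to where they were.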

On some measures, a collaborative approach to human-algorithmic interaction would make algorithmic encounters less efficient or less accurate. But the space it opens up creates room for more autonomy and playfulness, and perhaps for more trustworthy experiences. It is worth experimenting with.

News

Last week, a few members of our Stripe Partners team attended the memorable 20th edition of the EPIC People conference. It was a wonderful opportunity to learn from industry colleagues and reflect on our practice together.

The three papers we presented at EPIC can be viewed here.

Frames is a monthly newsletter that sheds light on the most important issues concerning business and technology.

Stripe Partners helps technology-led businesses invent better futures. To discuss how we can help you, get in touch.